Systematic Approach to Automated Software Testing with Vertex Testing

- Mariusz Sieraczkiewicz

- Software development, Testing

- January 26, 2025


A lot has been said about automated software testing. Although many patterns, practices, and strategies exist, it is still common to encounter complex, brittle, and hard-to-maintain test suites. In this article, we will explore the most common pitfalls of writing such tests and introduce a comprehensive, consistent strategy called Vertex Testing, which helps address these typical challenges.

Common Testing Pitfalls

Testing Scope Pitfall

A key challenge, especially in unit testing, is deciding what is truly worth testing. We have many options—should we test every method? Should we go as far as testing accessors? What exactly should be covered? Every function, every method, every procedure, or only selected ones?

Testing too much can make tests difficult to maintain and lead to redundancy. On the other hand, testing too little results in insufficient test coverage. This makes deciding what to test far from straightforward.

Many software developers blindly follow the idea that everything should be tested or that a test should always be written before any production code. But is blindly following such an approach truly beneficial?

Complex Data Setup Pitfall

One of the biggest challenges in testing is preparing data for meaningful tests. In any non-trivial system that has been developed for more than a few months, setting up the correct data—with proper dependencies and values—becomes increasingly difficult. Configuring numerous attributes and linking multiple values correctly is both time-consuming and complex. Additionally, complex test data is often hard to read, as analyzing existing tests requires reviewing numerous data properties. This also makes modifying tests during adjustments or refactoring particularly challenging.

To illustrate this issue, consider the following code snippet:

```groovy
def "should upsert books with generated loan records"() {
    given: "I have a library with a section for fiction books"
    def libraryCreationDate = LocalDate.of(2021, 1, 1)
    libraryDto.getCreationDate() >> libraryCreationDate
    def sectionId = 1L
    def bookId = 1L
    def bookCode = "B101"
    def book = createBook(bookId, sectionId, bookCode, libraryId, libraryCreationDate)
    book.setCategories(["Fiction"].toSet())
    bookFacade.getForLibrary(libraryId) >> [book]

    and: "The library has a loan history phase with multiple loan events scheduled for the book"
    def oneWeekLoan = TestLoanDtoFactory.createLoanPeriod([7, 14], 0)
    def twoWeekLoan = TestLoanDtoFactory.createLoanPeriod([14, 21, 28], 0)
    def specialLoan = TestLoanDtoFactory.createLoanPeriod([30, 60], 0)
    def generalLoan = TestLoanDtoFactory.createLoanType("General Loan")
    def extendedLoan = TestLoanDtoFactory.createExtendedLoan("Extended Loan")
    def specialAccessLoan = TestLoanDtoFactory.createSpecialLoan("Special Access", "VIP", ["UID1", "UID2"])
    def loanHistoryPhase = TestLoanDtoFactory.createPhase([
        type      : PhaseTypeDto.LOAN_HISTORY,
        activities: [TestLoanDtoFactory.createActivity([
            id        : sectionId,
            components: [
                TestLoanDtoFactory.createLoanEvent([
                    id       : 1,
                    sectionId: sectionId,
                    schedule : oneWeekLoan,
                    loans    : [TestLoanDtoFactory.createLoan(generalLoan)]
                ]),
                TestLoanDtoFactory.createLoanEvent([
                    id       : 2,
                    sectionId: sectionId,
                    schedule : twoWeekLoan,
                    loans    : [TestLoanDtoFactory.createLoan(extendedLoan)]
                ]),
                TestLoanDtoFactory.createLoanEvent([
                    id       : 3,
                    sectionId: sectionId,
                    schedule : specialLoan,
                    loans    : [TestLoanDtoFactory.createLoan(specialAccessLoan)]
                ])
            ]
        ])]
    ])
    loanFacade.getLoanHistoryForLibrary(libraryId, _, _, _) >> [loanHistoryPhase]

    and: "I have a book entry that needs to be upserted into the catalog"
    def books = [
        TestBookDtoFactory.createBook(bookId)
    ]

    and: "I have some view config"
    def viewConfig = new ViewConfigDto(TimePointUnitDto.DAYS)

    when: "I upsert this book"
    bookCatalogFacade.upsert(books, libraryId, viewConfig)

    then: "The external API is called to store the book entry"
    1 * bookContextFacade.saveOrUpdateBooks(_) >> { List<List<BookDto>> payloads ->
        def payload = payloads.get(0)
        // There is only one book entry saved
        assert payload.size() == 1
    }
}
```

As seen in this example, we need to set up multiple interconnected objects before the test can be executed. This setup complexity arises because each test requires configuring numerous instances of different entities, ensuring they align correctly to make the test meaningful. This level of complexity can make tests harder to maintain and extend over time.

Mocks Usage Pitfall

Another challenge arises as our system becomes increasingly complex and we attempt to test almost everything. At some point, we end up relying heavily on mocks, often having to mock numerous dependencies. This becomes particularly problematic when considering maintainability.

When refactoring, changes to existing code can take a significant amount of time, often requiring updates to mock definitions. This is especially challenging because, in many cases, these mock definitions are highly specific to the particular test scenario. As a result, modifying tests becomes cumbersome and error-prone.

Consider the example below. A large number of mocks are set up at the beginning and then modified or overridden in specific scenarios. This becomes particularly problematic in dynamic environments, where mock definitions are difficult to refactor and adapt to changes in feature implementations.

```groovy
@SuppressWarnings('GroovyAssignabilityCheck')
def setup() {
    userFacade = Mock()
    bookViewRepository = Mock()
    bookViewSettingsRepository = Mock()
    bookFacade = Mock()
    loanFacade = Mock()
    bookContextFacade = Mock()
    sectionFacade = Mock()
    libraryFacade = Mock()
    patronFacade = Mock()
    libraryDto = Mock()
    libraryDto.getId() >> libraryId
    dictionaryFacade = Mock()
    timePointFacade = new TimePointFacade()
    libraryFacade.findById(_) >> libraryDto
    libraryCatalogFacade = new LibraryFacadeTestConfiguration().libraryCatalogFacade(
            bookContextFacade,
            userFacade,
            libraryDataProvider,
            bookViewService,
            bookViewSettingsRepository,
            loanEventService,
            Mock(BookExportService), // tested in a separate Spec
            Mock(BookImportService)  // tested in a separate Spec
    )
}
```

Duplication Across Unit, Integration, and E2E Tests

It is very common to implement different types of tests independently, treating them as completely separate entities. However, general styles and structural patterns can be reused across different levels of testing while maintaining their unique characteristics. Each level of testing has its differentiators:

- Unit tests focus on domain edge cases.

- Integration tests verify interactions between modules and external components such as databases and REST APIs.

- End-to-end (E2E) tests focus on UI-specific behaviors.

What remains common across all these types of tests are core domain scenarios. Well-crafted domain scenarios can be shared between different types of tests, with additional cases specific to the type of test being executed.

Vertex Testing: A Unified Approach

Vertex Testing provides a balanced and coherent blend of well-known testing practices, ensuring consistency and maintainability across different test levels. It incorporates the following principles:

- BDD and Specification by Example – Using Behavior-Driven Development (BDD) to guide domain thinking and select appropriate unit test granularity.

- Focus on Use-Case Methods – Testing domain scenarios through dedicated use-case methods.

- Smart Builders – Using advanced builders to create complex domain objects with minimal custom configurations.

- Replacing Mocks with In-Memory Stubs – Using in-memory stubs instead of mocks to test external dependencies like databases, services, and messaging.

- Lightweight Application Object Factories – Simplifying the setup of application objects required for domain execution.

The core philosophy behind these practices is domain-oriented thinking, leveraging Domain-Driven Design (DDD) and the Ports and Adapters architecture to facilitate domain testing in isolation.

In the next sections, we will demonstrate how Vertex Testing addresses the common pitfalls discussed earlier.

Testing Domain Behaviors Instead of Testing Implementation Details

While testing domain behaviors is the default approach in acceptance testing, unit testing often lacks a clear strategy. A common practice in unit testing is to isolate and test every public method individually, frequently using mocks to replace external dependencies. This leads to a proliferation of single-purpose tests that focus heavily on implementation details rather than domain logic. As a result, maintaining these tests becomes challenging, as they are highly sensitive to changes in design, implementation, or refactoring.

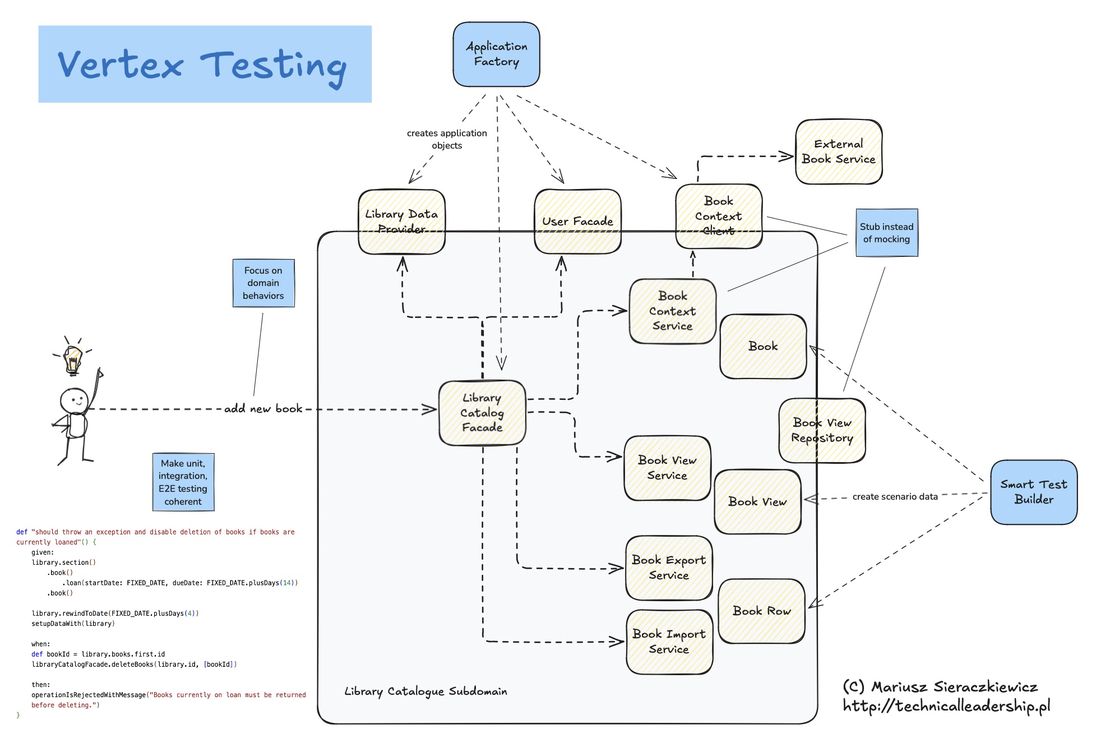

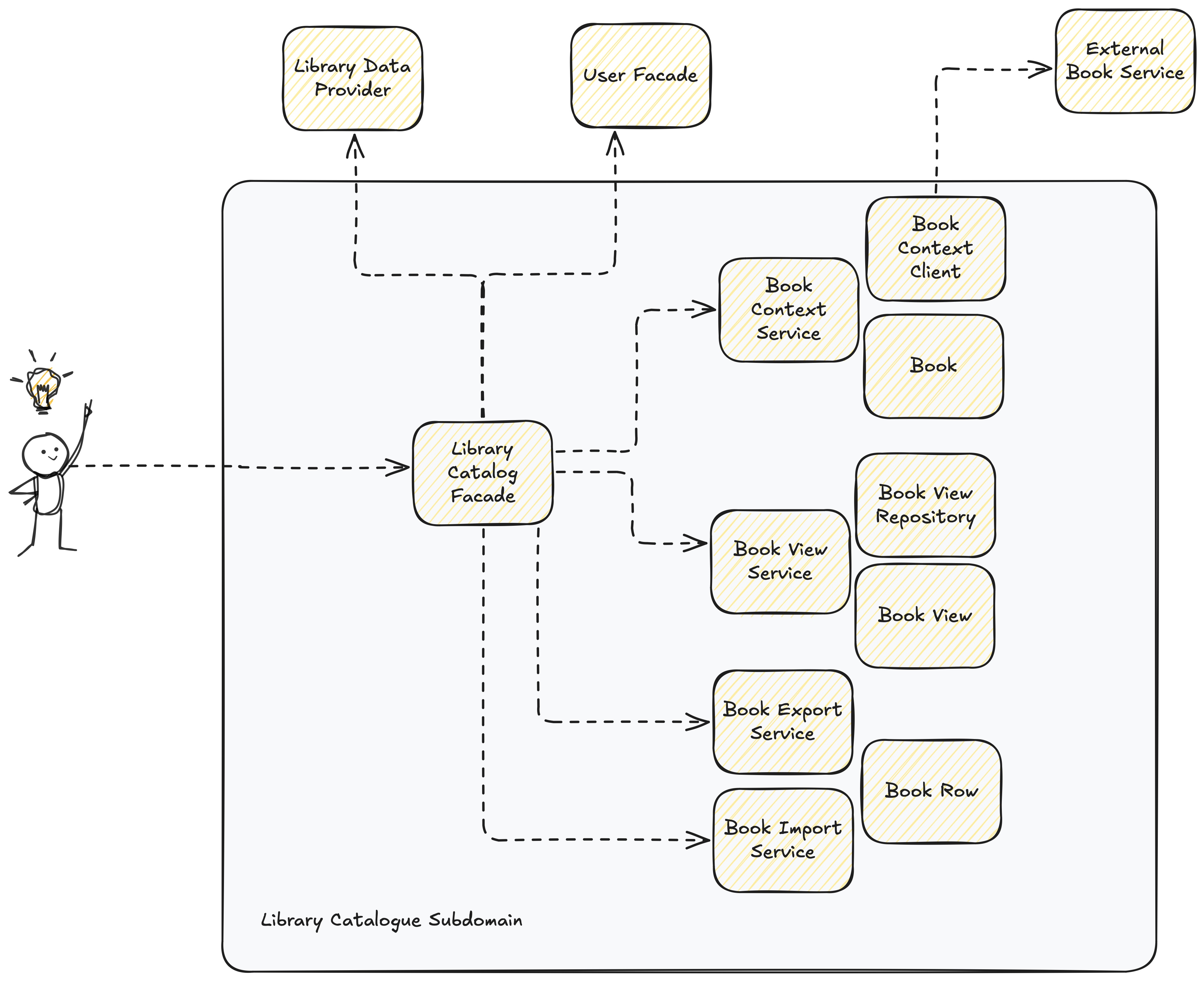

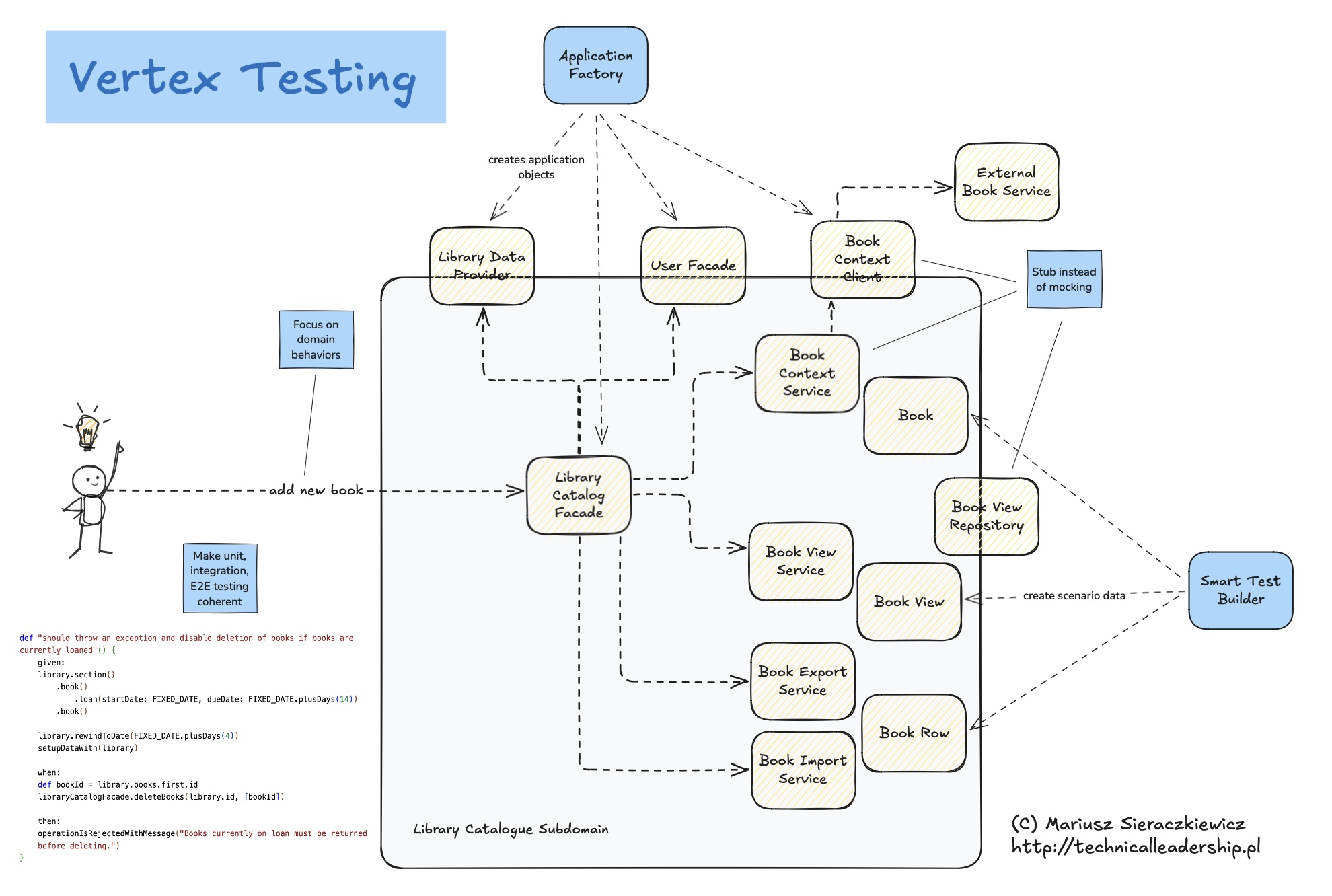

The diagram above represents a hypothetical library system with books, book view management, exporting, and importing book data.

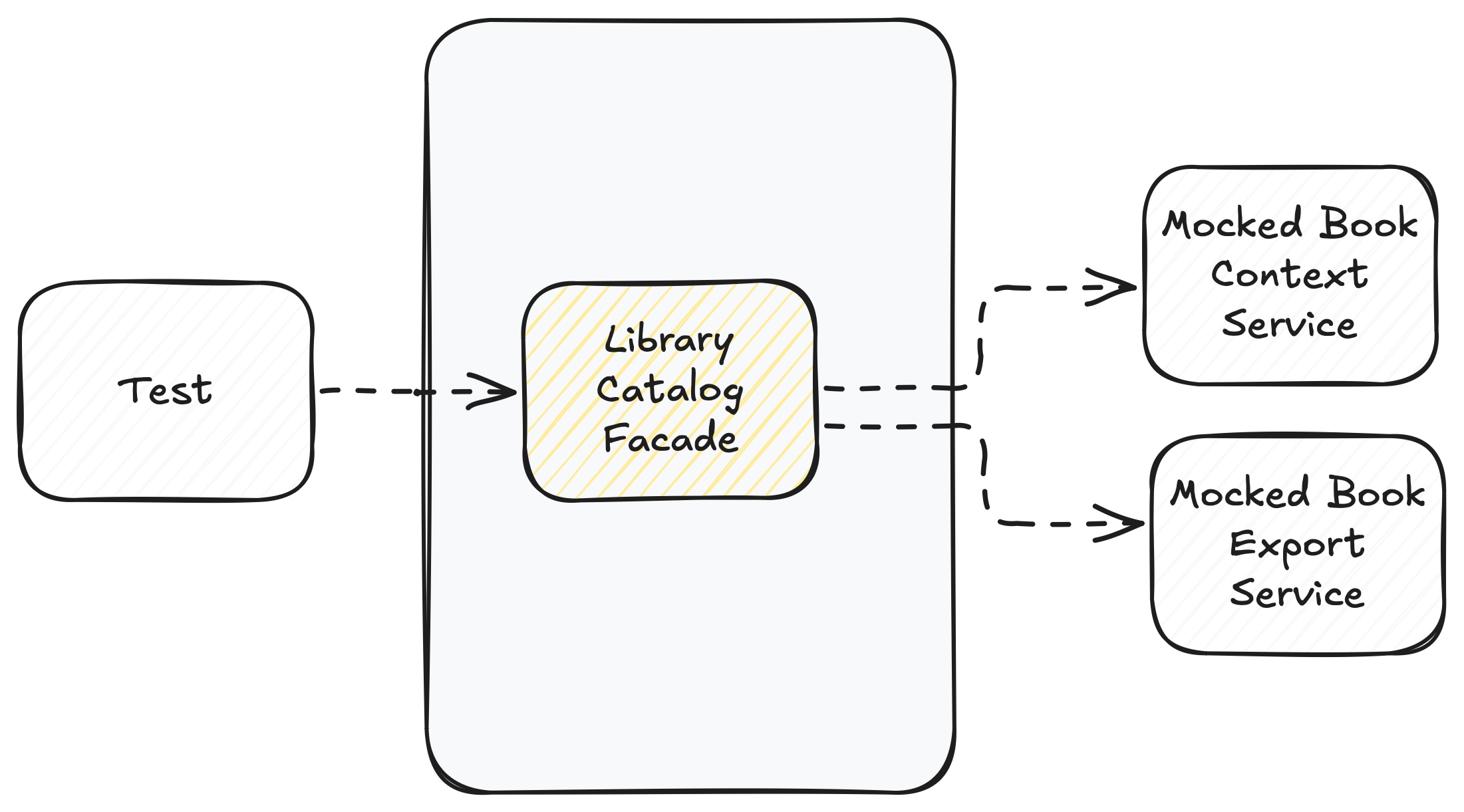

Overuse of Mocks and Method Isolation

A common unit testing practice involves preparing mocks for each scenario, injecting them into the tested class, and running individual method-based tests. This method-level isolation results in numerous small tests that validate very specific local design choices, as shown in the diagram below:

The problem with this approach is the sheer volume of customized mocks required for different scenarios. Each method needs its own setup, making tests more fragile and harder to maintain. Over time, the accumulation of such isolated tests results in a massive maintenance burden, slowing down development and refactoring efforts.

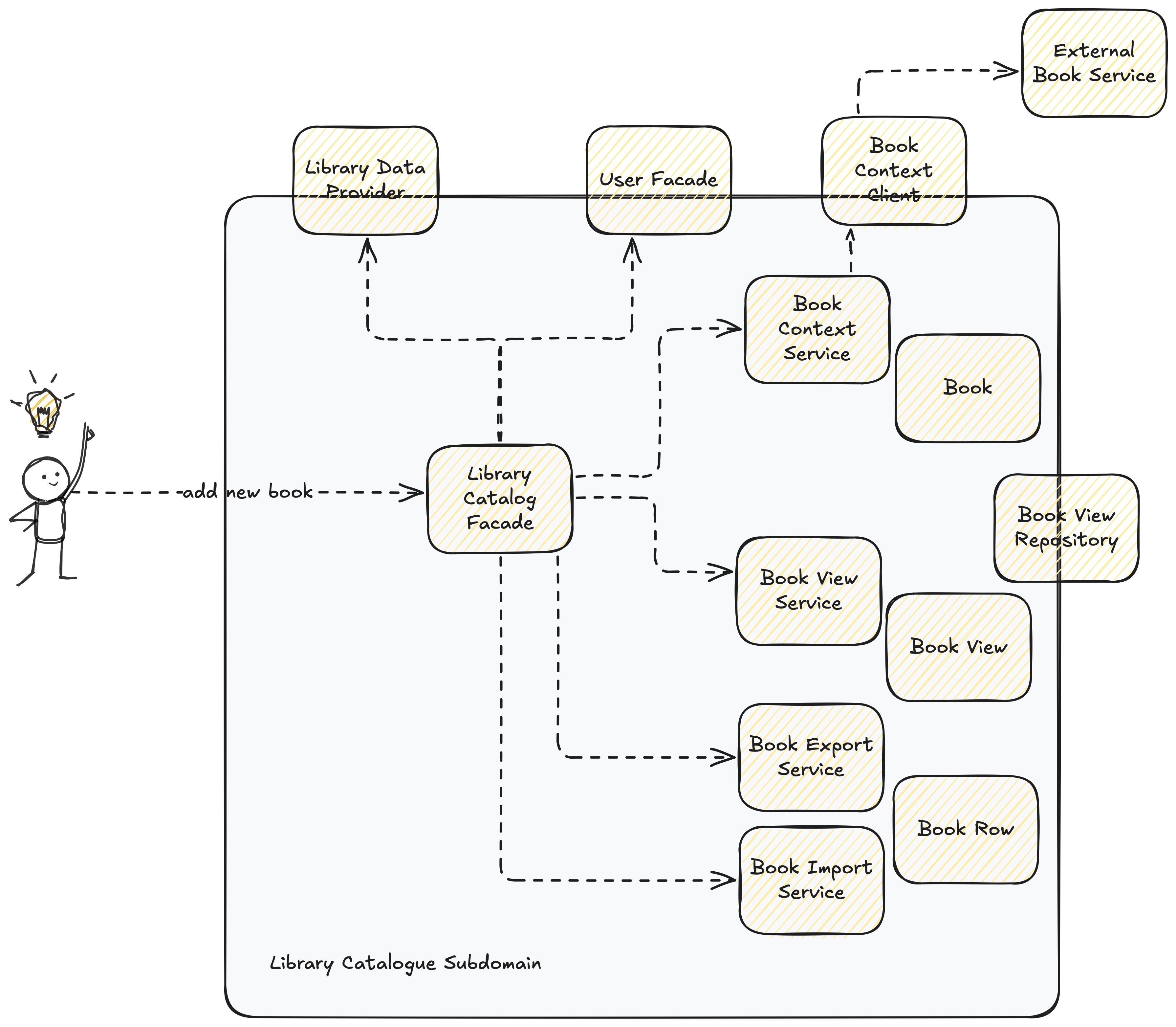

A Different Perspective: Testing Domain Scenarios

Instead of testing individual methods in isolation, a more pragmatic approach is to validate real domain scenarios—operations triggered by clients. In most systems, there is a structured application layer, such as a Business Layer Facade (in a layered architecture) or an Application Service (in Domain-Driven Design). These components encapsulate business logic and expose high-level operations that clients interact with. External components, such as REST controllers or other modules, should only communicate with a given module through its facade.

For example, in a library system, the Library Catalog Facade might expose operations such as:

- Adding a new book

- Updating a book

- Exporting selected books

- Importing new books

Each of these operations represents a domain scenario that should be tested holistically, ensuring the behavior aligns with business rules rather than focusing on internal method calls.
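A minimal Java sketch of this idea follows. `CatalogFacade`, `Book`, and every method name are hypothetical, and for brevity the books live in a map inside the facade rather than behind a repository port. The public methods mirror the operations listed above; `store` is an internal detail that is covered by the scenarios instead of by tests of its own.

```java
import java.util.*;

record Book(String id, String title) {}

// Hypothetical facade: each public method is one client-facing domain scenario.
class CatalogFacade {
    // Simplification for the sketch: storage lives inside the facade.
    private final Map<String, Book> books = new LinkedHashMap<>();

    Book addBook(String id, String title) {
        if (books.containsKey(id)) {
            throw new IllegalArgumentException("Book with ID = " + id + " already exists");
        }
        return store(id, title);
    }

    Book updateBook(String id, String newTitle) {
        if (!books.containsKey(id)) {
            throw new IllegalArgumentException("Book with ID = " + id + " does not exist");
        }
        return store(id, newTitle);
    }

    List<Book> exportBooks(Collection<String> ids) {
        // Unknown IDs are skipped here; a real rule might reject them instead.
        return ids.stream().map(books::get).filter(Objects::nonNull).toList();
    }

    void importBooks(List<Book> imported) {
        imported.forEach(book -> store(book.id(), book.title()));
    }

    // Internal detail: exercised through the scenarios above, never tested directly.
    private Book store(String id, String title) {
        if (title == null || title.isBlank()) {
            throw new IllegalArgumentException("Title must not be blank");
        }
        Book book = new Book(id, title.strip());
        books.put(id, book);
        return book;
    }
}
```

A test for this facade reads as a short story: add a book, update it, import another, export both, and compare the exported list with the expected one. Renaming or splitting `store` does not affect such a test.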

Structuring Tests with Ports & Adapters

A well-suited architecture for this testing approach is Ports & Adapters (Hexagonal Architecture), which separates domain logic from external dependencies. Even without adopting Ports & Adapters fully, a well-structured layered architecture can offer similar benefits. The key principle is to fully instantiate and execute the domain logic while mocking or stubbing only the external adapters (e.g., repositories, external service clients, and message publishers).

The diagram below illustrates how this architecture can be applied to a library system. Here, ports and adapters cross subdomain boundaries, ensuring that domain logic remains fully testable while external dependencies are abstracted away:

This way, unit tests focus on actual application behavior rather than specific method implementations, making them more resilient to changes and reducing the maintenance burden.
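In code, the principle might look like the following Java sketch. The names are hypothetical: `BookRepository` is a port declared by the domain, `CatalogService` is domain logic that a test instantiates for real, and `InMemoryBookRepository` is the adapter a unit test plugs in where production code would use a database-backed one.

```java
import java.util.*;

record Book(String id, boolean onLoan) {}

// Port: the domain declares what it needs from storage, nothing more.
interface BookRepository {
    Optional<Book> findById(String id);
    void save(Book book);
    void delete(String id);
}

// Domain logic: instantiated as a regular object in tests, depends only on the port.
class CatalogService {
    private final BookRepository books;

    CatalogService(BookRepository books) {
        this.books = books;
    }

    void deleteBook(String id) {
        Book book = books.findById(id)
                .orElseThrow(() -> new NoSuchElementException("Unknown book " + id));
        if (book.onLoan()) {
            throw new IllegalStateException("Book " + id + " is on loan");
        }
        books.delete(id);
    }
}

// Adapter used in unit tests; production code would plug in a database adapter instead.
class InMemoryBookRepository implements BookRepository {
    private final Map<String, Book> store = new HashMap<>();

    @Override public Optional<Book> findById(String id) { return Optional.ofNullable(store.get(id)); }

    @Override public void save(Book book) { store.put(book.id(), book); }

    @Override public void delete(String id) { store.remove(id); }
}
```

Only the adapter differs between a unit test and production; the deletion rule itself runs unmodified in both.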

Using BDD and Specification by Example to Improve Test Focus

Behavior-Driven Development (BDD) and Specification by Example reinforce the focus on domain behavior rather than implementation details. While test structures such as Given/When/Then, Arrange/Act/Assert, or Setup/Execute/Verify are widely used, they are often applied mechanically. Instead, tests should be structured around concrete examples that accurately represent domain scenarios, reducing unnecessary complexity and improving clarity.

For example, consider the following precise scenario:

```
// given there is a book B
// and book B was loaned four days ago
// when book B is being deleted
// then deletion is rejected
```

This explicitly defines the test setup, operation, and expected result. In contrast, a more generic scenario like this provides less clarity:

```
// given there is a loaned book
// when loaned book is deleted
// then deletion is rejected
```

While both tests aim to validate the same behavior, the first version offers more concrete details, improving readability and test maintenance.
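The first scenario could translate into code roughly as follows. This is a plain-Java sketch with hypothetical types, not the author's test code. It assumes the catalog reads the current date from an injected `java.time.Clock`, so "loaned four days ago" becomes two fixed dates instead of depending on the real system time. The Given/When/Then lines are kept as comments.

```java
import java.time.*;
import java.util.*;

record Loan(LocalDate start, LocalDate due) {
    boolean isActiveOn(LocalDate day) {
        return !day.isBefore(start) && !day.isAfter(due);
    }
}

record Book(String id, List<Loan> loans) {}

record Outcome(String rejectionReason, boolean bookStillPresent) {}

class DeletionRejected extends RuntimeException {
    DeletionRejected(String reason) { super(reason); }
}

// Hypothetical catalog whose notion of "today" comes from an injected Clock.
class Catalog {
    private final Map<String, Book> books = new HashMap<>();
    private final Clock clock;

    Catalog(Clock clock) { this.clock = clock; }

    void add(Book book) { books.put(book.id(), book); }

    boolean contains(String id) { return books.containsKey(id); }

    void delete(String id) {
        LocalDate today = LocalDate.now(clock);
        Book book = books.get(id);
        if (book != null && book.loans().stream().anyMatch(loan -> loan.isActiveOn(today))) {
            throw new DeletionRejected("Books currently on loan must be returned before deleting.");
        }
        books.remove(id);
    }
}

class LoanedBookDeletionScenario {
    static final LocalDate LOAN_DATE = LocalDate.of(2024, 1, 1);

    static Outcome run() {
        // given there is a book B
        // and book B was loaned four days ago
        Instant fourDaysLater = LOAN_DATE.plusDays(4).atStartOfDay(ZoneOffset.UTC).toInstant();
        Catalog catalog = new Catalog(Clock.fixed(fourDaysLater, ZoneOffset.UTC));
        catalog.add(new Book("B", List.of(new Loan(LOAN_DATE, LOAN_DATE.plusDays(14)))));

        // when book B is being deleted
        String reason = null;
        try {
            catalog.delete("B");
        } catch (DeletionRejected e) {
            reason = e.getMessage();
        }

        // then deletion is rejected (the caller asserts on the outcome)
        return new Outcome(reason, catalog.contains("B"));
    }
}
```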

Another well-structured scenario covering deletion of a non-existing book:

```
// given there is a book B1 with ID: 0001
// and there is a book B2 with ID: 0002
// and there are no other books
// when book with ID: 20000 is deleted
// then deletion is rejected
// and reason for rejection is "Book with ID = 20000 does not exist, so cannot be removed"
```

This contrasts with a less specific version:

```
// given there are books in the library
// when a book with a non-existing ID is deleted
// then deletion is rejected
```

While the latter expresses a general domain rule, it lacks the specificity needed for an effective test scenario.
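The more specific scenario can be sketched the same way. Again, every name is hypothetical, and `deleteBook` returns a result value instead of throwing, an assumption made only to keep the sketch short. The check covers both the exact rejection reason and the fact that books 0001 and 0002 remain untouched.

```java
import java.util.*;

sealed interface DeletionResult permits Deleted, Rejected {}

record Deleted(String id) implements DeletionResult {}

record Rejected(String reason) implements DeletionResult {}

// Hypothetical library holding only book IDs, enough for this scenario.
class Library {
    private final Set<String> bookIds = new TreeSet<>();

    void addBook(String id) { bookIds.add(id); }

    Set<String> bookIds() { return Set.copyOf(bookIds); }

    DeletionResult deleteBook(String id) {
        if (!bookIds.remove(id)) {
            return new Rejected("Book with ID = " + id + " does not exist, so cannot be removed");
        }
        return new Deleted(id);
    }
}

class NonExistingBookScenario {
    static boolean passes() {
        // given there is a book B1 with ID: 0001
        // and there is a book B2 with ID: 0002
        // and there are no other books
        Library library = new Library();
        library.addBook("0001");
        library.addBook("0002");

        // when book with ID: 20000 is deleted
        DeletionResult result = library.deleteBook("20000");

        // then deletion is rejected with the exact reason, and no book was removed
        return result.equals(new Rejected("Book with ID = 20000 does not exist, so cannot be removed"))
                && library.bookIds().equals(Set.of("0001", "0002"));
    }
}
```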

To improve test clarity and effectiveness, we should aim for:

- Minimal but sufficient setup in the Given section

- A clearly defined operation in the When section

- Precise and relevant assertions in the Then section

By focusing on domain-driven testing instead of method-level validation, tests become more stable, meaningful, and maintainable. This approach aligns tests with real application behavior, ensuring that they remain valuable even as the system evolves.

Importantly, in Vertex Testing, BDD refers to a way of formulating automated test scenarios around the domain, not to adopting the full BDD methodology in which business stakeholders write the scenarios themselves.

Using Smart Builders Instead of Complex Data Setup

As systems mature, domain objects often become more complex, requiring significant effort to create test data for non-trivial scenarios. However, in many cases:

- Certain attribute values are not crucial for a specific scenario, as long as they are valid from a domain perspective.

- Many attributes have reasonable default values that apply to most test cases.

- Dependencies between objects allow some attributes to be derived automatically.

```groovy
class LibraryCatalogFacadeSpec extends BaseLibraryCatalogFacadeSpec {

    private static final LocalDate FIXED_DATE = LocalDate.of(2024, 1, 1)

    private SmartLibraryBuilder library
    private LibraryTestConfiguration configuration
    private LibraryCatalogFacade libraryCatalogFacade

    def setup() {
        library = SmartLibraryBuilder.newLibrary()
        configuration = new LibraryTestConfiguration()
        libraryCatalogFacade = configuration.libraryCatalogFacade()
    }

    def "should throw an exception and reject deletion of books if provided book IDs do not exist"() {
        given:
        library.section()
               .book(id: "0001")
               .book(id: "0002")
        configuration.setup(library)

        when:
        libraryCatalogFacade.deleteBooks(library.id, ["20002"]) // Non-existing ID

        then:
        def exception = thrown(LibraryException)
        exception.message.startsWith("Books with provided IDs do not exist in the library: ")
    }
}
```

As the example above shows, most attributes do not need to be specified to set up the required test scenario. Explicitly defining only the attributes that matter reduces cognitive load, making tests easier to write, understand, and maintain. Although writing a smart builder is not always straightforward, it pays off in the long run because it is reused across many tests, simplifying future test writing.
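The article does not show how `SmartLibraryBuilder` is implemented. Below is one possible Java sketch under our own assumptions, with hypothetical names and fields: every attribute gets a valid default, missing book IDs are generated, and each book is attached to the most recently added section (one is created if none exists yet). Groovy's named arguments such as `book(id: "0001")` are replaced by a plain `book(String id)` overload.

```java
import java.util.*;

record Section(String id, String name) {}

record Book(String id, String title, String sectionId, Set<String> categories) {}

// Hypothetical smart builder: valid defaults, generated IDs, derived relationships.
class SmartLibraryBuilder {
    private final List<Section> sections = new ArrayList<>();
    private final List<Book> books = new ArrayList<>();
    private int sectionSequence = 0;
    private int bookSequence = 0;

    static SmartLibraryBuilder newLibrary() {
        return new SmartLibraryBuilder();
    }

    SmartLibraryBuilder section() {
        String id = "S" + (++sectionSequence);
        sections.add(new Section(id, "Section " + id)); // default name derived from the ID
        return this;
    }

    // A book whose ID does not matter for the scenario.
    SmartLibraryBuilder book() {
        return book(null);
    }

    SmartLibraryBuilder book(String id) {
        if (sections.isEmpty()) {
            section(); // derived: every book must belong to a section
        }
        String bookId = (id != null) ? id : "B" + (++bookSequence);
        String sectionId = sections.get(sections.size() - 1).id(); // derived from chain position
        books.add(new Book(bookId, "Title of " + bookId, sectionId, Set.of("Fiction")));
        return this;
    }

    List<Section> sections() { return List.copyOf(sections); }

    List<Book> books() { return List.copyOf(books); }
}
```

With this sketch, `newLibrary().section().book("0001").book()` yields two books in one section, the second with a generated ID, while the test mentions only the ID it actually cares about.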

Using In-Memory Adapter Stubs Instead of Mocking

Dynamic mocks are a powerful tool for minimizing test scope and mimicking external dependencies. However, they come at a cost: mocks must be configured specifically for each scenario, and in dynamically typed languages such as Groovy, refactoring them often requires extensive manual work. This is mainly because mocks depend on implementation details, making tests brittle and tightly coupled to the internal structure of the system.

In Vertex Testing, real, fully instantiated objects are preferred, especially for the domain layer. Facades, application services, domain services, and business rules should be instantiated as regular objects rather than mocked. This approach reduces mock dependency in testing. However, when reaching the boundary of the domain, known as Adapters in Ports and Adapters architecture, different strategies must be employed.

Boundary adapters include repositories, external service clients, message publishers (e.g., Kafka, RabbitMQ, SNS), controllers, and event listeners. These components should not be instantiated directly in unit tests. Instead, in-memory implementations or simplified stubs should be used, ensuring reusable dependencies without requiring repeated configuration across tests.
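A minimal Java sketch of such in-memory adapters follows; all names are hypothetical. The repository genuinely stores books in a map, and the message publisher records what it was asked to send. Because both behave like their real counterparts, no test has to stub them, and assertions can check the resulting state rather than which methods were called.

```java
import java.util.*;

record Book(String id, String title) {}

record BookEvent(String type, String bookId) {}

// Ports (hypothetical): storage and messaging as seen by the domain.
interface BookRepository {
    void save(Book book);
    Optional<Book> findById(String id);
}

interface BookEventPublisher {
    void publish(BookEvent event);
}

// In-memory repository: real storage semantics, no per-test configuration.
class InMemoryBookRepository implements BookRepository {
    private final Map<String, Book> store = new HashMap<>();

    @Override public void save(Book book) { store.put(book.id(), book); }

    @Override public Optional<Book> findById(String id) { return Optional.ofNullable(store.get(id)); }
}

// In-memory publisher: records events instead of sending them to a broker.
class InMemoryBookEventPublisher implements BookEventPublisher {
    private final List<BookEvent> published = new ArrayList<>();

    @Override public void publish(BookEvent event) { published.add(event); }

    List<BookEvent> published() { return List.copyOf(published); }
}

// Real domain logic, wired to the in-memory adapters in tests.
class BookRegistration {
    private final BookRepository books;
    private final BookEventPublisher events;

    BookRegistration(BookRepository books, BookEventPublisher events) {
        this.books = books;
        this.events = events;
    }

    void register(Book book) {
        books.save(book);
        events.publish(new BookEvent("BookRegistered", book.id()));
    }
}
```

A test registers a book and then checks what is stored and what was published; renaming or splitting the internals of `register` does not require touching the test.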

Creating multiple objects for testing manually can be tedious. Instead, reusable factory classes should be used to instantiate application objects efficiently, keeping test setup code clean and maintainable.

```java
// Test-only factory: each accessor lazily creates its object once and then reuses it,
// so every collaborator shares the same in-memory adapters.
public class LibraryTestConfiguration {

    private LibraryCatalogFacade libraryCatalogFacade;
    private LibraryDataProvider libraryDataProvider;
    private InMemoryUserFacade userFacade;
    private BookContextService bookContextService;
    private BookViewService bookViewService;
    private InMemoryBookContextClient bookContextClient;
    private InMemoryBookViewRepository bookViewRepository;
    private BookExportService bookExportService;
    private BookImportService bookImportService;

    public LibraryCatalogFacade libraryCatalogFacade() {
        if (this.libraryCatalogFacade == null) {
            // Real domain objects wired to in-memory adapters
            this.libraryCatalogFacade = new LibraryCatalogFacade(
                    userFacade(),
                    bookContextService(),
                    libraryDataProvider(),
                    bookViewService(),
                    bookViewRepository(),
                    bookContextClient(),
                    bookExportService(),
                    bookImportService()
            );
        }
        return this.libraryCatalogFacade;
    }

    public LibraryDataProvider libraryDataProvider() {
        if (this.libraryDataProvider == null) {
            this.libraryDataProvider = new LibraryDataProvider();
        }
        return this.libraryDataProvider;
    }

    public InMemoryUserFacade userFacade() {
        if (this.userFacade == null) {
            this.userFacade = new InMemoryUserFacade();
        }
        return this.userFacade;
    }

    public BookContextService bookContextService() {
        if (this.bookContextService == null) {
            this.bookContextService = new BookContextService(bookContextClient());
        }
        return this.bookContextService;
    }

    public BookViewService bookViewService() {
        if (this.bookViewService == null) {
            this.bookViewService = new BookViewService(bookViewRepository(), libraryDataProvider());
        }
        return this.bookViewService;
    }

    public InMemoryBookContextClient bookContextClient() {
        if (this.bookContextClient == null) {
            this.bookContextClient = new InMemoryBookContextClient();
        }
        return this.bookContextClient;
    }

    public InMemoryBookViewRepository bookViewRepository() {
        if (this.bookViewRepository == null) {
            this.bookViewRepository = new InMemoryBookViewRepository();
        }
        return this.bookViewRepository;
    }

    public BookExportService bookExportService() {
        if (this.bookExportService == null) {
            this.bookExportService = new BookExportService();
        }
        return this.bookExportService;
    }

    public BookImportService bookImportService() {
        if (this.bookImportService == null) {
            this.bookImportService = new BookImportService();
        }
        return this.bookImportService;
    }

    // Loads the data described by the smart builder into the in-memory adapters
    public void setup(SmartLibraryBuilder library) {
        var libraryDataProvider = libraryDataProvider();
        libraryDataProvider.libraryFacade.save(library.getLibrary());
        libraryDataProvider.bookFacade.save(library.getBooks());
        libraryDataProvider.sectionFacade.save(library.getSections());

        var repository = bookViewRepository();
        repository.save(library.getBooks());
    }
}
```

The factory above provides a fully configured LibraryCatalogFacade built from real domain objects, with in-memory implementations standing in for repositories and external service clients. Its LibraryTestConfiguration.setup method loads the domain data described by the Smart Test Builder into those in-memory adapters, keeping test setup short and clean.

```groovy
class LibraryCatalogFacadeSpec extends BaseLibraryCatalogFacadeSpec {

    private SmartLibraryBuilder library
    private LibraryTestConfiguration configuration
    private LibraryCatalogFacade libraryCatalogFacade

    def setup() {
        library = SmartLibraryBuilder.newLibrary()
        configuration = new LibraryTestConfiguration()
        libraryCatalogFacade = configuration.libraryCatalogFacade()
    }

    // ... feature methods ...
}
```

By using smart builders and in-memory adapters, tests focus on domain behaviors rather than implementation details. This approach minimizes the maintenance burden of mock configurations while improving test clarity and resilience.

BDD Spec by Example, Smart Test Builder, and Application Test Factories Instead of Duplicating Implementations in Unit, Integration, and E2E Tests

All Vertex Testing techniques provide an additional advantage—when applied consistently, they allow for a unified approach to testing across different levels. By leveraging BDD, Specification by Example, and focusing on domain scenarios, many tests—whether unit, integration, or end-to-end—can be expressed in the same way, reducing duplication and improving clarity.

Smart Test Builders and Application Test Factories can be adjusted to handle implementation details specific to each test level. For example:

- Unit Tests: Builders provide in-memory representations and stub dependencies.

- Integration Tests: Factories leverage actual infrastructure, such as databases or external service stubs.

- End-to-End Tests: Builders configure the necessary scenario setup via UI interactions rather than directly manipulating data.

The setup method plays a key role as the central point where common test scenario data is defined and applied in the correct way for the given test type. In integration tests, this might involve saving data in a real database and retrieving valid IDs for object relationships, while in end-to-end tests, it could mean simulating UI interactions to prepare the system for a test.
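One way to realize this is sketched below in Java, with hypothetical names throughout. The scenario data is described once (`LibraryScenario`), and each test level supplies its own `ScenarioSetup`. The second implementation merely stands in for an integration-level setup by generating IDs the way a database might; a real one would write to the database, and an end-to-end one would drive the UI.

```java
import java.util.*;

record BookSpec(String id, String title) {}

// Scenario data described once, independent of the test level.
record LibraryScenario(List<BookSpec> books) {}

// Each test level decides how the same scenario enters the system and
// returns the IDs that the rest of the test should use.
interface ScenarioSetup {
    List<String> apply(LibraryScenario scenario);
}

// Unit level: put the data straight into in-memory adapters, keeping the given IDs.
class InMemoryScenarioSetup implements ScenarioSetup {
    final Map<String, BookSpec> books = new LinkedHashMap<>();

    @Override
    public List<String> apply(LibraryScenario scenario) {
        scenario.books().forEach(book -> books.put(book.id(), book));
        return scenario.books().stream().map(BookSpec::id).toList();
    }
}

// Stand-in for an integration-level setup: the "database" assigns its own IDs,
// so the setup reports those back instead of the IDs from the scenario.
class GeneratedIdScenarioSetup implements ScenarioSetup {
    final Map<Long, BookSpec> table = new LinkedHashMap<>();
    private long nextId = 1000;

    @Override
    public List<String> apply(LibraryScenario scenario) {
        List<String> assigned = new ArrayList<>();
        for (BookSpec book : scenario.books()) {
            long id = nextId++;
            table.put(id, book);
            assigned.add(Long.toString(id));
        }
        return assigned;
    }
}

// A given-step written once and reusable at every test level.
class SharedScenarios {
    static List<String> givenTwoBooks(ScenarioSetup setup) {
        return setup.apply(new LibraryScenario(List.of(
                new BookSpec("0001", "Dune"),
                new BookSpec("0002", "Emma"))));
    }
}
```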

Of course, there are still cases where tests must be specialized for a particular level—for example, UI interactions that have no direct domain effect or technical infrastructure tests specific to integration testing. However, maintaining a common approach across all levels keeps implementation consistent, lowers cognitive load, and makes writing and maintaining tests a smoother experience.

```groovy
def "should throw an exception and disable deletion of books if books are currently loaned"() {
    given:
    library.section()
           .book()
               .loan(startDate: FIXED_DATE, dueDate: FIXED_DATE.plusDays(14))
           .book()
    library.rewindToDate(FIXED_DATE.plusDays(4)) // the first book was loaned four days ago
    configuration.setup(library)
    def bookId = library.books.first().id

    when:
    libraryCatalogFacade.deleteBooks(library.id, [bookId])

    then:
    // thrown() must be called directly in the then: block, not inside a helper method
    def exception = thrown(LibraryException)
    exception.message == "Books currently on loan must be returned before deleting."
}
```

Summary

To wrap things up, Vertex Testing brings together different techniques to solve common problems in test design. Instead of getting lost in the details of method-level tests, we focus on domain behaviors, ensuring that our tests reflect how the system is actually used.

The diagram below gives a high-level overview of how everything fits together:

The key takeaways of Vertex Testing include:

- BDD and Specification by Example keep tests readable and aligned with real-world use cases.

- Smart Test Builders simplify test setup by allowing developers to focus only on relevant attributes.

- Application Test Factories ensure a clean, reusable way to create application objects at different test levels.

- In-Memory Stubs replace excessive mocking, making tests more robust and easier to maintain.

By sticking to these principles, unit, integration, and end-to-end tests stay consistent, clear, and focused on real application behavior rather than implementation details.

Appendix: Key Terms

Ports and Adapters (Hexagonal Architecture)

A software architecture pattern that separates the core domain logic from external dependencies, such as databases, APIs, and user interfaces. It enables testing the core logic in isolation and allows flexibility in replacing external components.

Domain-Driven Design (DDD)

An approach to software development that emphasizes modeling business domains using real-world concepts and structuring code around domain logic rather than technical details.

Application Service

A service layer that contains application logic and orchestrates domain operations. It typically does not contain business rules but coordinates them across domain entities.

Facade Pattern

A structural design pattern that provides a simplified interface to a complex subsystem. It is commonly used to expose domain logic through a single point of entry.

Builder Pattern

A creational design pattern used to construct complex objects step by step. It allows defining object creation in a flexible and readable manner, which is particularly useful for setting up test data.